World News

World news, global politics, business, technology, and culture: stay updated with breaking stories, international trends, and major events. Get the latest from The Thaiger, your trusted source for global news.

-

Thai masseuse in Germany sued for providing ‘special service’ without consent

A Thai masseuse in Germany has to pay €1,000 in compensation to a male client after she sexually assaulted him by providing “special services” without consent. The complainant, a 26-year-old German software engineer, identified only as Ben, reported the sexual assault to police two days after it occurred at a massage shop on Osloer Straße in Berlin. The…

-

Russian man jumps from plane after landing at Bangkok airport

A foreign man in underwear, believed to be a Russian national, caused chaos and jumped out of an airplane through an emergency exit door shortly after it landed at Don Mueang International Airport in Bangkok, following a journey from Vietnam. A Thai woman who was travelling on the same flight shared footage of the incident on her TikTok account, @piapornchamsri, on…

-

AOT to raise passenger service charge for international flights from May

Airports of Thailand (AOT) confirmed plans to increase the passenger service charge (PSC) for international departures in May this year. While the increase will largely affect foreign travellers, AOT insisted that it will not reduce the number of international visitors to Thailand. The chief executive officer of AOT, Paweena Jariyathitipong, told local media that the PSC for passengers departing on international…

-

AirAsia leaves 23 passengers behind on bus, cites miscommunication

AirAsia issued an apology following a coordination issue that left 23 passengers on a shuttle bus during boarding for a domestic flight from Bangkok to Hat Yai. The incident occurred on January 17 on Flight FD3116, which was scheduled to depart Don Mueang International Airport at 7.10am. A passenger later described the situation in a Facebook post published on January…

-

Thailand considers invitation to join US-led Gaza peace board

Thailand is reviewing an invitation from US President Donald Trump to join a proposed international ‘Board of Peace’ aimed at resolving the conflict in Gaza, according to the Ministry of Foreign Affairs today, January 19. The initiative, part of Trump’s proposed Comprehensive Plan to End the Gaza Conflict, outlines the creation of a peace board initially focused on Gaza, with…

-

Thai foreign minister seeks clarity on US immigrant visa halt

Thailand’s foreign minister met with a top United States diplomat in Bangkok today, January 15, to seek clarification over Washington’s recent decision to suspend immigrant visa processing for 75 countries, including Thailand. Foreign Affairs Minister Sihasak Phuangketkeow said he had summoned the US chargé d’affaires in Thailand to discuss the move, after Thailand appeared on a newly released list of…

-

Thailand among 75 nations hit by US visa processing suspension

The United States will temporarily suspend immigrant visa processing for Thailand and 74 other countries, effective January 21, 2026, as part of tighter immigration screening under its America First policy, the US State Department announced on January 14. The immigrant visa freeze targets applicants from countries where migrants are said to disproportionately rely on public assistance after settling in the…

-

What’s happening in Iran? How hunger protests turned into a fight to topple the Islamic Republic.

Iran is currently cut off from the outside world. The government has severed internet and telephone lines nationwide, hoping to blind and deafen the international community to the brutality of its crackdown. Yet, the screams of frustration from the streets echo far beyond the digital blackout. This nationwide uprising is a clear signal: the 40-year-old theocratic regime is facing its…

-

New ‘Republic of Kawthoolei’ announced at Thai-Myanmar border

The son of a former leader of the Karen National Union (KNU) declared independence and announced the establishment of the Republic of Kawthoolei, a self-proclaimed state located along the Thai–Myanmar border. Nerdah Mya, the son of former KNU leader Bo Mya, introduced himself as the first president of the Republic of Kawthoolei, meaning “the land without darkness” in Karen, on…

-

Cambodia accused of covering up Angkor Wat YouTuber assault

A travel YouTuber known as The Country Collectors, who was assaulted at Cambodia’s Angkor Wat, said in a statement posted yesterday, January 7, that the Cambodian government has attempted to cover up the story. In his statement posted on social media, the YouTuber said the APSARA National Authority, responsible for overseeing Angkor Archaeological Park, released an official response to the story.…

-

Missing Chinese influencer found injured and begging in Cambodia

A Chinese influencer was found injured and begging for money in Cambodia after having been out of contact with her family since December 26 last year. Images of a Chinese woman carrying an X-ray film circulated widely on Cambodian and Thai social media recently. The woman was seen wearing a white mini dress with a tweed blazer, glasses, and a headband worn across…

-

New fraud center compound found in Cambodia, 50 km from Poipet

Authorities have identified a new large-scale scam compound operating in Cambodia, around 50 kilometres from Poipet, raising fresh concerns over the regional spread of online fraud networks targeting victims worldwide. The Anti-Online Fraud Center, known as ACSC, confirmed the discovery on January 5, 2026, warning that criminal syndicates are rapidly relocating and expanding after recent coordinated crackdowns in Thailand, China,…

-

Thailand calls for restraint amid US-Venezuela tensions

Thailand’s Ministry of Foreign Affairs yesterday, January 4, issued a statement calling for restraint amid escalating tensions between the United States and Venezuela. The US launched a military strike across northern Venezuela on Saturday, January 3, under an operation named Operation Absolute Resolve. During the raid, Venezuelan President Nicolás Maduro and his wife, Cilia Flores, were captured following allegations that…

-

Royal Thai Navy detains 67 Cambodian migrants attempting illegal border crossing into Thailand

The Royal Thai Navy has detained 67 Cambodian migrant workers who attempted to illegally cross the Thai border in Chanthaburi province, authorities confirmed on January 3, 2026. The group included men, women, and children who officials said were fleeing severe economic hardship, unemployment, and food insecurity in their home communities. Rear Admiral Parach Rattanachaiyapan, spokesperson for the Royal Thai Navy,…

-

Explosion at Swiss bar in Crans-Montana kills at least 40

A devastating explosion followed by a massive fire at a popular bar in the renowned Swiss ski resort of Crans-Montana has resulted in a “mass casualty” event, sending shockwaves across Europe. Swiss police confirmed “dozens” of fatalities, while Italian authorities report the death toll…

-

“I Paid For It!” Chinese guest fumes after being stopped from packing buffet food

A heated altercation erupted at a hotel in Suzhou, Jiangsu Province, after a mother and son were stopped from packing food from the breakfast buffet to take home. It escalated into a physical confrontation after the guests refused to comply with hotel regulations. Witnesses…

-

Thai and Cambodian diplomats meet in China to reinforce ceasefire and border stability

Top diplomats from Thailand and Cambodia met in China on Sunday for high-level talks aimed at reinforcing a fragile ceasefire and preventing a return to fighting along their disputed border, as Beijing steps up its role as a regional mediator. The meetings follow the signing of a new ceasefire agreement intended to halt weeks of clashes that have killed more…

-

Trump says Thailand-Cambodia peace achieved with ‘very little assistance’ from UN

US President Donald Trump said yesterday, December 28, that Thailand and Cambodia returned to peace following a recent ceasefire agreement, claiming the outcome was achieved with “very little assistance” from the United Nations (UN). Trump made the statement on his social media platform, Truth Social, shortly after the ceasefire agreement between Thailand and Cambodia was reached on Saturday, December 27. In…

-

Thailand agrees to ceasefire, rejects pre-clash position return

Thailand says it is prepared to enter a short ceasefire with Cambodia but will not accept any deal that requires Thai forces to retreat from areas they have secured during the latest border clashes. Prime Minister Anutin Charnvirakul said the National Security Council has approved a 72-hour ceasefire proposal and authorized Defense Minister Nattaphon Narkphanit to represent Thailand at talks…

-

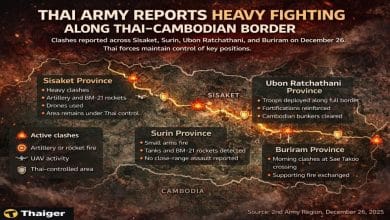

Thai army reports heavy clashes in Sisaket as border tensions stay high

The 2nd Army Region has released its latest summary of fighting along the Thai-Cambodian border, saying tensions remained high on December 26, particularly in Sisaket province, where heavy exchanges of fire were reported throughout the day. According to the army, clashes were concentrated across multiple points along the border, but Thai forces retained control of all strategic areas. In Ubon…

-

Thai tutor confronts foreign man shouting ‘Ni Hao’ and urges others to speak up

A Thai English-language tutor and social media influencer shared her experience of confronting a foreign man who shouted “Ni Hao” at her, urging others not to stay silent when faced with similar behaviour. The influencer, Warinthorn “Ann” Euawasinthon, widely known as Kru P’Ann, is the founder of Learnovate and a popular online educator. She has more than 1.7 million followers…

-

South Korean celebrity Hwang Hana returns home and surrenders to drug charges

A South Korean celebrity, Hwang Hana, who was wanted under an Interpol Red Notice, returned to her home country yesterday, December 24, and surrendered to authorities to face legal proceedings over drug-related offences. The 37-year-old celebrity is widely known as the granddaughter of the founder of Namyang Dairy Products, one of South Korea’s major food companies. She is…

-

Malaysian woman allegedly lives double life after secret marriage in Songkhla

A Malaysian woman called on religious authorities to take action after discovering that her brother’s wife had secretly married another man in Songkhla province of Thailand and lived a double life for more than a year. The woman, identified as Ekin Derahim, shared details of the alleged affair on her Facebook account on December 11. In her post, she also…

-

Trump reclassifies cannabis in major US drug policy shift

US President Donald Trump has ordered a change to federal drug regulations, signing an executive order to downgrade cannabis from a Schedule I to a Schedule III narcotic, the most significant shift in American drug policy in decades. The reclassification would place cannabis in the same category as ketamine, anabolic steroids, and Tylenol with codeine, substances considered to have legitimate…

-

US trade deal on hold as tariffs could rise if border conflict drags on

A senior official at Thailand’s Ministry of Commerce has warned that any trade deal with the United States cannot be finalized until a new parliament and cabinet are in place, as concerns grow that Washington could raise tariffs if fighting between Thailand and Cambodia is not resolved. Speaking on Friday, Chotima Iamsawadikul, director-general of the Department of International Trade Negotiations,…

-

ASEAN steps in as Thailand-Cambodia border war tests regional unity

The Association of Southeast Asian Nations (ASEAN) foreign ministers will meet in Malaysia on Monday in a renewed attempt to stop escalating violence along the Thailand-Cambodia border, as clashes spread across disputed territory and humanitarian pressures intensify. The talks in Kuala Lumpur come after weeks of fighting that have left at least 40 people dead and forced more than 500,000…

-

Thai and Cambodian airport passenger numbers drop despite open flights

Tensions between Thailand and Cambodia have spilled into the aviation sector, with airport authorities in both countries trading accusations even as flight connections remain open and operational. At the center of the dispute are allegations from Cambodian officials that passengers transiting through Bangkok face repeated delays and baggage problems, claims Thai authorities strongly deny. This week, Airports of Thailand rejected…

-

6 Thai masseuses arrested for prostitution during raid on spa in Taiwan

Police in Taiwan raided a spa in Tainan City and arrested six Thai masseuses for allegedly providing illegal sex services. A Taiwanese customer was also arrested after being caught engaging in sexual activity during the operation. Officers from Shanhua Police Station carried out the raid on December 10 after receiving a tip-off that the spa was secretly operating as a brothel.…

-

Trip.com halts cooperation with Cambodia amid privacy concerns

Online travel agency Trip.com cancelled its cooperation agreement with Cambodia’s tourism authorities after Chinese and Thai users reportedly expressed fears that their personal information could be leaked or misused. According to a recent report by Chinese local news outlet ST Headline, Trip.com had entered into a tourism promotion partnership with Cambodia’s National Tourism Authority (NTA). The agreement was signed on December…

-

Israel confirms identity of Thai hostage’s body from Gaza

The Israeli Prime Minister’s office has confirmed that the Red Cross handed over the body of Thai national Sudthisak Rintalak on Wednesday, December 3. Only one deceased hostage remains in Gaza, 24-year-old Ran Gvili, an Israeli police officer. Sudthisak and Gvili were the final two hostages who had not been recovered from the territory. The Palestinian Islamic Jihad’s (PIJ)…
