Facebook mistakenly banning OK content a growing problem

Language and expression are full of nuance, subtlety, and varied meaning, both explicit and implicit. The problem is that Facebook often fails to recognize this. Society faces an increasingly conspicuous problem: Facebook and other social media platforms use algorithms to block prohibited content but offer no useful channels for correcting their mistakes.

Many people have been muted or banned, temporarily or permanently, from a social media platform without any idea of what they did wrong, or for supposed violations of the terms of service that don't actually break any rules. When a social media platform has grown so large and so central to the lives of people and businesses, having no recourse or avenue to find out what got you blocked can have a devastating effect on livelihoods and lives.

While Facebook claims that this is a very rare occurrence, on a platform so large even a rare occurrence can affect hundreds of thousands of people. A problem affecting just one-tenth of 1% of Facebook's active users would still hit nearly 3 million accounts. The Wall Street Journal recently estimated that Facebook likely makes about 200,000 wrong decisions per day when blocking content.

People have been censored or blocked from the platform because their names sounded too fake. Ads for clothing designed for disabled people were removed by algorithms that wrongly concluded they broke the rules by promoting medical devices. The Vienna Tourist Board had to move to OnlyFans, a site friendly to adult content, to share works of art from Vienna's museums after Facebook removed photos of paintings. Words that have rude popular meanings but more specific definitions in certain circles, such as "hoe" among gardeners or "cock" among chicken farmers and gun enthusiasts, can land people in so-called "Facebook jail" for days or even weeks.

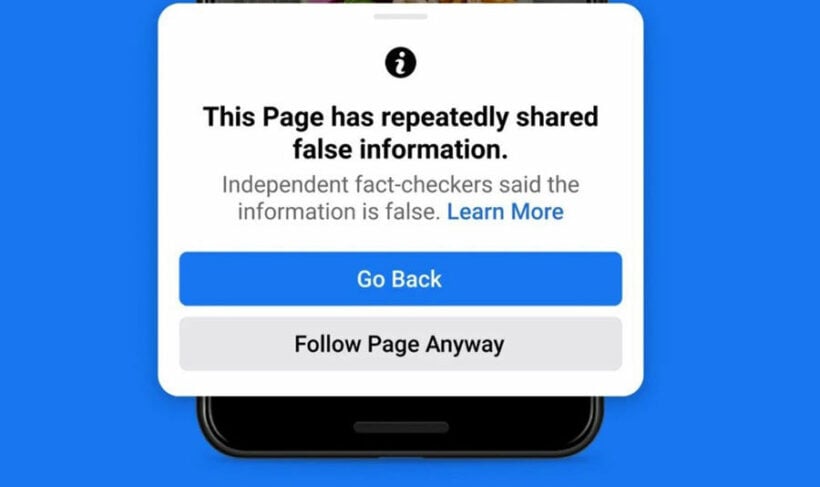

Facebook often errs on the side of caution when blocking money scams, medical disinformation, incitement to violence, and content that perpetuates sexual abuse or endangers children. But when it makes mistakes, Facebook does very little to right the wrongs. Experts say Facebook could do much more to tell users why a post was deleted or why they were blocked, and to provide clear appeal processes that actually elicit a response from the company.

Facebook does not give outsiders access to its data on moderation errors, citing user privacy, though the company says it spends billions of dollars on staff and algorithms to oversee what users post. Even its own semi-independent Facebook Oversight Board says the company isn't doing enough. And with few consequences for its errors, Facebook has little incentive to improve.

A professor at the University of Washington Law School compared Facebook to a construction company tearing down a building. US law holds demolition companies to a high standard of accountability, requiring safety precautions in advance and compensation for any damage that occurs. But giant social media companies face no comparable accountability for restricting, or allowing, the wrong information.

SOURCE: Bangkok Post