AI extinction risk warning backed by OpenAI, DeepMind chiefs

The potential threat of artificial intelligence (AI) causing human extinction has been highlighted by numerous experts, including the leaders of OpenAI and Google DeepMind. They have endorsed a statement on the Centre for AI Safety’s website, which calls for mitigating the risk of extinction from AI, placing it alongside other global priorities such as pandemics and nuclear war. However, some experts argue that these concerns are exaggerated.

Sam Altman, CEO of ChatGPT-developer OpenAI, Demis Hassabis, CEO of Google DeepMind, and Dario Amodei of Anthropic are among those who have backed the statement. The Centre for AI Safety’s website outlines various potential disaster scenarios, and Dr Geoffrey Hinton, who previously warned about the dangers of super-intelligent AI, has also supported their call. Yoshua Bengio, a computer science professor at the University of Montreal, has signed as well. Dr Hinton, Prof Bengio, and NYU Professor Yann LeCun are often referred to as the “godfathers of AI” due to their pioneering work in the field, which earned them the 2018 Turing Award for outstanding contributions to computer science.

Nonetheless, Prof LeCun, who also works at Meta, considers these apocalyptic warnings to be overblown. He said on Twitter that “the most common reaction by AI researchers to these prophecies of doom is face palming”. Many other experts similarly believe that fears of AI annihilating humanity are unrealistic, and that they distract from issues such as bias in systems, which are already a problem.

Arvind Narayanan, a computer scientist at Princeton University, has previously told the BBC that science fiction-like disaster scenarios are not feasible: “Current AI is nowhere near capable enough for these risks to materialise. As a result, it’s distracted attention away from the near-term harms of AI”.

Elizabeth Renieris, senior research associate at Oxford’s Institute for Ethics in AI, told BBC News that she was more concerned about nearer-term risks. She said that advancements in AI could “magnify the scale of automated decision-making that is biased, discriminatory, exclusionary or otherwise unfair while also being inscrutable and incontestable”. In her view, this would increase misinformation, erode public trust and exacerbate inequality, particularly for those on the wrong side of the digital divide.

However, Dan Hendrycks, director of the Centre for AI Safety, argued that future risks and present concerns “shouldn’t be viewed antagonistically”. He explained that addressing current issues can be useful for managing many of the future risks.

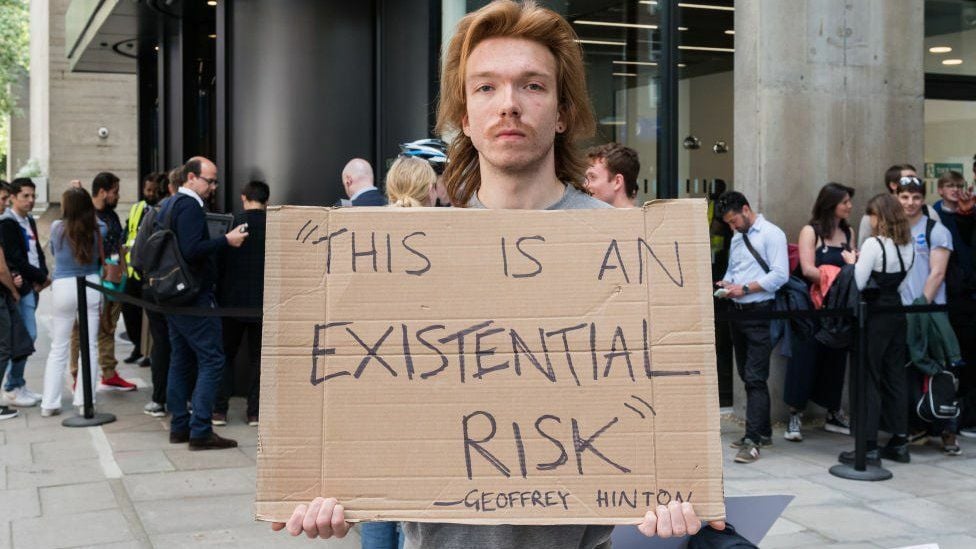

Media coverage of AI’s alleged “existential” threat has escalated since March 2023 when experts, including Tesla CEO Elon Musk, signed an open letter calling for a halt in the development of the next generation of AI technology. The new campaign features a brief statement aimed at sparking discussion and compares the risk to that posed by nuclear war. OpenAI recently proposed in a blog post that superintelligence might be regulated similarly to nuclear energy: “We are likely to eventually need something like an IAEA [International Atomic Energy Agency] for superintelligence efforts”, the company wrote.

Both Sam Altman and Google CEO Sundar Pichai have recently discussed AI regulation with UK Prime Minister Rishi Sunak. In response to the latest warning about AI risks, Mr Sunak emphasised the benefits to the economy and society, while acknowledging the need for safety and security measures. He reassured the public that the government is carefully examining the issue and said he had discussed it with other leaders at the G7 summit. The G7 has recently established a working group on AI.
